This blog post is about upgrading vRealize Orchestrator 8.X to a newer version. After several vRealize Orchestrator upgrades since the 8.0 release, and after getting stuck a couple of times, I decided to do a simple write-up with some tips and tricks.

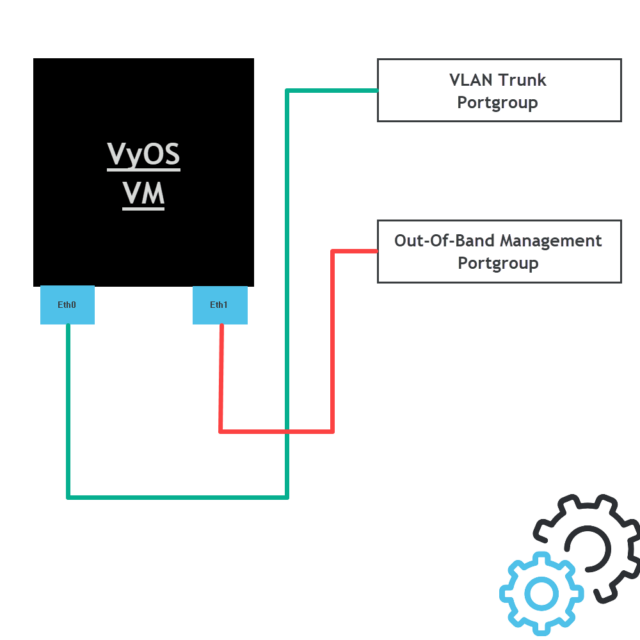

In my lab environment, I run multiple Orchestrators in embedded, standalone, and clustered deployments. Most of the issues I encountered are related to the standalone deployment that is connected to the VMware vCenter Server.

vRO upgrade checks

Let’s start with some simple pre-upgrade checks to make sure everything is working before the upgrade and to improve the chances of success.

- Make sure the root account has not expired on any node in the cluster.

- Make sure you have the correct vCenter SSO password. Verify this by logging in with administrator@vsphere.local on the vCenter Server. The password is required when upgrading a standalone vRO that is directly connected to the VMware vCenter Server.

- Make sure the time sync is working on all the nodes in the cluster.
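The root account and time sync checks above can be scripted. Here is a minimal sketch, assuming the appliance provides the standard `chage` and `timedatectl` tools; the function names `expiry_lines` and `check_node` are my own and not part of vRO.

```shell
#!/bin/sh
# Pre-upgrade checks; run as root on every vRO node.
# Assumption: chage (shadow-utils) and timedatectl (systemd) are available.

# Read `chage -l` output on stdin and keep only the expiry lines.
expiry_lines() {
    grep -E '^(Password|Account) expires'
}

# Print the root expiry lines, the clock sync status, and the current UTC time.
check_node() {
    chage -l root | expiry_lines
    timedatectl status | grep -i 'synchronized'
    date -u
}
```

Running `check_node` on each node and comparing the output side by side should show an expired root account or a drifting clock before the upgrade starts.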

vRO upgrade

Let’s start with the vRealize Orchestrator Upgrade. Here is an overview of the procedure and the commands required to perform the upgrade.

Keep in mind: Step six (entering the SSO password) is only required for a vRealize Orchestrator that is connected to the vCenter SSO. For a vRealize Orchestrator that is connected to vRealize Automation, this step can be skipped.

Procedure:

- Create a virtual machine snapshot.

- Open an SSH session with the vRealize Orchestrator node.

- Log in with the root account on the vRealize Orchestrator node.

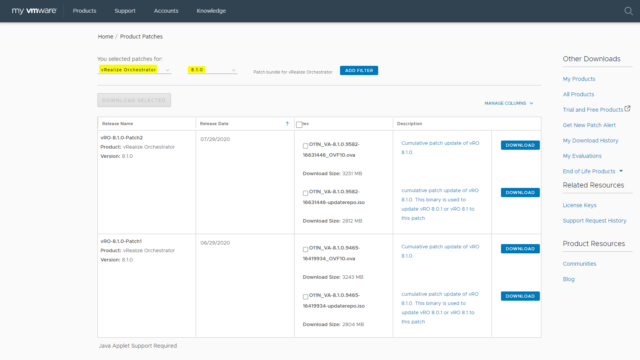

- Mount the upgrade media to the virtual machine.

- Mount the media in the Linux system (mount /dev/sr0 /mnt/cdrom).

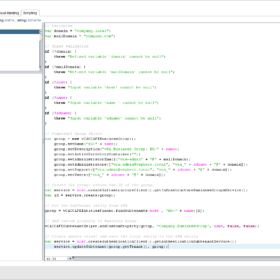

- Enter the SSO password as a variable in the shell (export VRO_SSO_PASSWORD=your_sso_password).

- Start the upgrade (vracli upgrade exec -y --profile lcm --repo cdrom://).

- The upgrade will start. Depending on the size of the vRealize Orchestrator node, it will take between 30 and 90 minutes.

- After the upgrade is completed, restart the system (reboot).

- Verification:

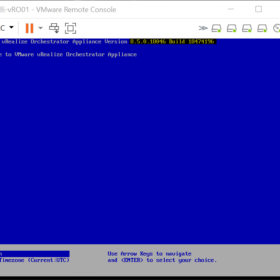

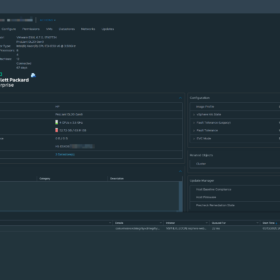

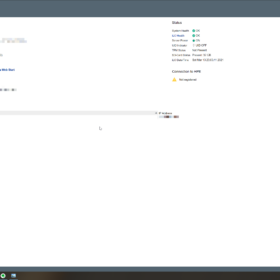

- Check the virtual machine console for startup issues. Make sure the console is displaying a blue screen with information about the node.

- Check the version/build number that is displayed on the blue screen of the virtual machine console.

- Check if the web interface is available and the interface is working.

- Log in to the vRO interface and verify that authentication is working.

- Run a basic workflow.

- Remove the virtual machine snapshot.
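The command-line part of the procedure above can be collected into one small script. This is only a sketch: the `run` and `vro_upgrade` helpers and the `DRY_RUN` switch are my own additions for illustration, and creating the mount point with `mkdir -p` assumes /mnt/cdrom may not exist yet. The mount and vracli commands are the ones from the steps.

```shell
#!/bin/sh
# Sketch of the upgrade commands; run as root on the vRO node.
# Set DRY_RUN=1 to print the commands instead of executing them.

# Execute a command, or only print it when DRY_RUN=1 (hypothetical helper).
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

vro_upgrade() {
    # Assumption: the mount point may be missing, so create it first.
    run mkdir -p /mnt/cdrom
    run mount /dev/sr0 /mnt/cdrom

    # Only needed for a standalone vRO that is connected to the vCenter SSO.
    # Export the password if it has been set in the current shell.
    if [ -n "${VRO_SSO_PASSWORD:-}" ]; then
        export VRO_SSO_PASSWORD
    fi

    run vracli upgrade exec -y --profile lcm --repo cdrom://
    # After the upgrade is completed, restart the node manually with: reboot
}
```

Calling `DRY_RUN=1 vro_upgrade` first prints the commands without touching the system, which is a cheap way to check for typos before the real run.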

Screenshot(s)

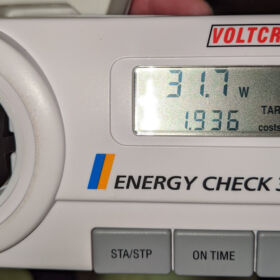

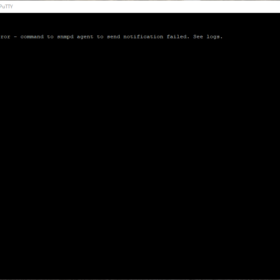

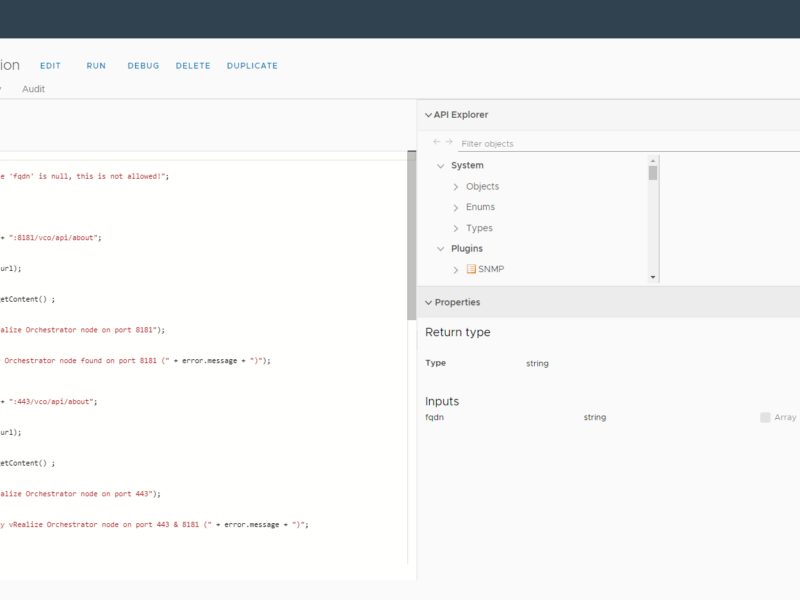

Here are a couple of screenshots of the upgrade process and the end result after a successful upgrade:

Summary

So that was my short blog post about my vRealize Orchestrator upgrade experience so far with version 8.X. I hope it was useful. In most cases, the problems were caused by an expired root account or an incorrect SSO password.

It would be nice if the upgrade process validated the entered SSO password instead of hanging for hours in a crashed upgrade state without returning any error message to the console or shell session.

Thanks for reading and see you next time! Please respond in the comment section below if you have any remarks :).

Official documentation: