This blog post is about replacing my current 24×7 lab with a new set of two HPE ProLiant DL20 Gen9 servers. I will cover the configuration of the machines and how they run VMware ESXi, and I will compare them with my other lab hardware and my past home lab equipment.

Hardware

So let’s kick off with the hardware! I bought both HPE DL20 Gen9 servers new in the box on eBay and changed the hardware components to my own liking.

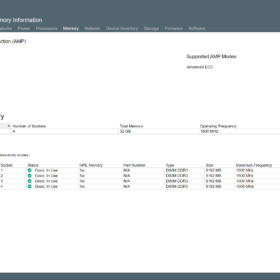

A couple of interesting points I have learned so far: nearly all servers that you will find for sale come with an Intel Xeon E3-12XX v5 processor. One thing to take into account: yes, you can swap the CPU from a v5 to a v6 like I did, but you also need to replace the memory modules! A memory module works with either a v5 or a v6 processor, but not with both. The Intel Xeon E3-12XX v5 CPUs use 2133 MHz memory and the Intel Xeon E3-12XX v6 CPUs use 2400 MHz memory. So keep that in mind when swapping the processor and/or buying memory.
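As a quick sanity check before ordering parts, the pairing described above can be written down as a small lookup. This is only a sketch of the rule of thumb from this post (v5 with 2133 MHz, v6 with 2400 MHz), not a full compatibility table; the function names `cpu_generation` and `memory_matches_cpu` are made up for illustration.

```python
# Memory speed per CPU generation, exactly as stated in this post.
# Assumption: only these two generations are relevant for the DL20 Gen9.
MEMORY_SPEED_MHZ = {"v5": 2133, "v6": 2400}


def cpu_generation(cpu_model: str) -> str:
    """Return the generation suffix ('v5' or 'v6') of a CPU model string."""
    suffix = cpu_model.strip().split()[-1].lower()
    if suffix not in MEMORY_SPEED_MHZ:
        raise ValueError(f"unknown CPU generation in {cpu_model!r}")
    return suffix


def memory_matches_cpu(cpu_model: str, dimm_speed_mhz: int) -> bool:
    """True if the DIMM speed is the one this post pairs with the CPU."""
    return MEMORY_SPEED_MHZ[cpu_generation(cpu_model)] == dimm_speed_mhz


# Example: the CPU I ended up with, checked against both memory speeds.
print(memory_matches_cpu("Intel Xeon E3-1230 v6", 2400))
print(memory_matches_cpu("Intel Xeon E3-1230 v6", 2133))
```

Always double-check the vendor documentation for the exact modules you are buying; the lookup only mirrors what I ran into myself.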

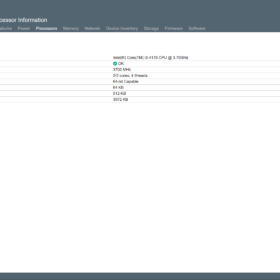

In the end, after some swapping of components, I ended up with the following configuration. Both ProLiant servers have an identical configuration (as it should be in a vSphere cluster):

| Component | Item |
|---|---|
| Vendor | HPE |
| Model | DL20 Gen9 |
| CPU | Intel® Xeon® Processor E3-1230 v6 |
| Memory | 64 GB DDR4 ECC (4 x 16 GB UDIMM @ 2400 MHz) |
| Storage | 32 GB SD card on the motherboard |
| Storage controller | All disabled |
| Network card(s) | HPE Ethernet 1Gb 2-port 332i Network Adapter |
| Expansion card(s) | HPE 361T Dual-Port 2x Gigabit-LAN PCIe x4 |
| Rackmount kit | HPE 1U Short Friction Rail Kit |

Power usage

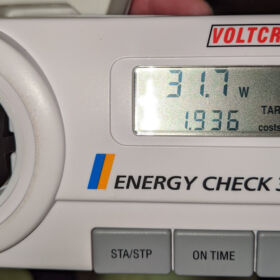

So far I have measured the power usage of each machine individually, with the configuration listed in the hardware section. During the measurement the machine was running VMware ESXi with about seven virtual machines on top, which together used about 30% of the total capacity. I was quite amazed by the low power consumption of 31.7 watts per host, but I have to take into account that this is only the compute part! The hosts are not responsible for storage. I took a photo of my power meter while performing the test.
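To put the 31.7 watts into perspective for a lab that runs 24×7, here is a rough calculation of the yearly energy use. It assumes the hosts draw the measured 31.7 W constantly, which will not hold exactly under a changing load. The electricity price is a placeholder value, not my actual tariff, so fill in your own.

```python
HOURS_PER_YEAR = 24 * 365  # non-leap year, hosts running 24x7


def annual_energy_kwh(watts: float, hosts: int = 1) -> float:
    """Energy in kWh per year for `hosts` machines drawing `watts` each."""
    return watts * hosts * HOURS_PER_YEAR / 1000


def annual_cost(watts: float, hosts: int, price_per_kwh: float) -> float:
    """Yearly electricity cost in the currency of `price_per_kwh`."""
    return annual_energy_kwh(watts, hosts) * price_per_kwh


measured_watts = 31.7   # per host, from the measurement above
price_per_kwh = 0.30    # hypothetical price, replace with your own tariff

print(f"One host:  {annual_energy_kwh(measured_watts):.1f} kWh/year")
print(f"Two hosts: {annual_energy_kwh(measured_watts, hosts=2):.1f} kWh/year")
print(f"Two hosts: {annual_cost(measured_watts, 2, price_per_kwh):.2f} per year")
```

At a constant draw, that works out to roughly 278 kWh per year per host, or about 555 kWh per year for both hosts together, storage not included.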

Screenshot(s)

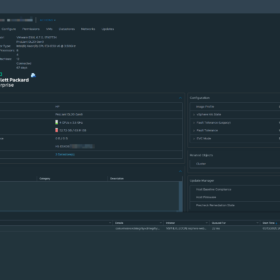

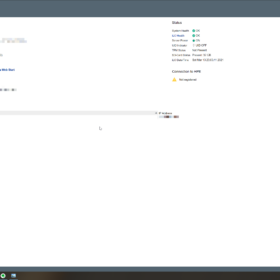

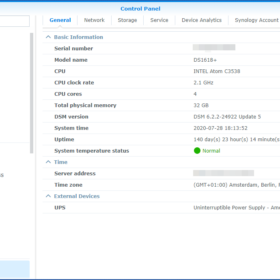

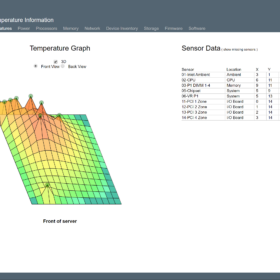

Here are some screenshots of the servers running in my home lab environment with some virtual machine workload:

- Screenshot 01: Shows one of the hosts running VMware ESXi 6.7 (screenshot from HPE iLO).

- Screenshot 02: Shows one of the hosts connected to VMware vCenter and running virtual machines.

- Screenshot 03: Shows the HPE iLO web page of one of the hosts.

Positives & Negatives

To sum up my experience, I have created a list of positives and negatives to give you some insight into the HPE ProLiant DL20 Gen9 as a home lab server.

Positives:

- A lot of CPU power compared to my previous ESXi hosts, link to the previous setup.

- Rack-mounted servers (half-size deep with sliding rails).

- Out of band management by default (HPE iLO).

- Power usage is good for the amount of compute power delivered.

- No additional drivers are required for VMware ESXi to run.

- The HPE DL20 Gen9 has been on the VMware HCL, link.

Negatives:

- Noisy compared to my previous setup (HPE ProLiant ML10 Gen8). For comparison, the HPE ProLiant DL360 Gen8 is in most cases “quiet” compared to the HPE ProLiant DL20 Gen9.

- It would be nice if there was support for more memory, because you can never have enough of that in a virtualization environment ;).

Photos

Here are some photos of the physical hardware and the internals. I did not take any pictures of the hardware once all the components were installed. I am sorry :(.

- Photo 01: Shows both machines running and installed in the 19″ server rack.

- Photo 02: Shows the internals of the DL20 Gen9. Keep in mind this one is empty. As you can see in that picture, the chassis is just half-size!

Wrap-up

So that concludes my blog post. If you have additional questions or remarks, please respond in the comment section below. Thanks for reading my blog post and see you next time.