On 11 January 2022, Microsoft released updates that put some domain controllers into a boot loop. The issue affects Windows Server 2016, Windows Server 2019, and Windows Server 2022. When my lab environment ran into the problem, I noticed that I could not find the updates listed online on my servers. After some searching, I realized that Windows Server 2022 Core is different and has different updates installed.

In my environment, I am running two Windows Server 2022 Core edition domain controllers. On 19 January, both domain controllers went into a boot loop overnight after my automated patching tools installed the updates.

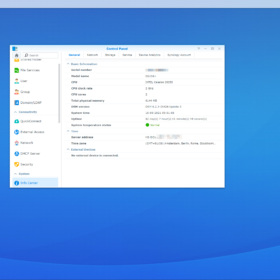

Server with Desktop Experience

As listed on the websites, these are the updates you need to remove from the system:

# Remove the updates

wusa /uninstall /kb:5009595 /quiet

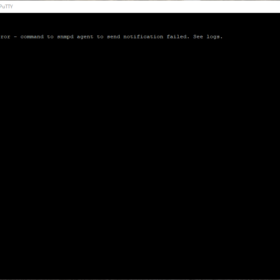

wusa /uninstall /kb:5009624 /quiet

When I ran the commands, the Windows Server 2022 domain controller told me that the updates were not installed on this system, which was quite weird. After running the following command and checking the KB article descriptions on the Microsoft website, I found the correct one (the articles I am referring to are listed below).

# List installed updates on the machine

wmic qfe list

Server Core

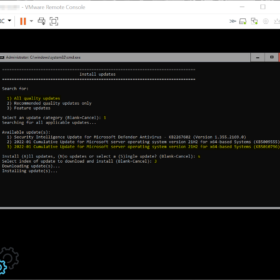

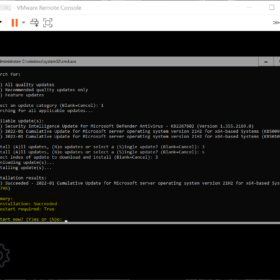

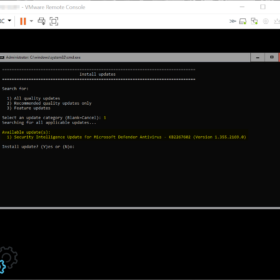

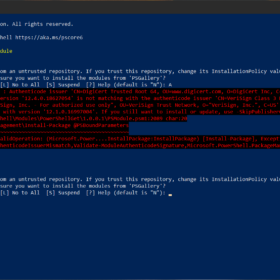

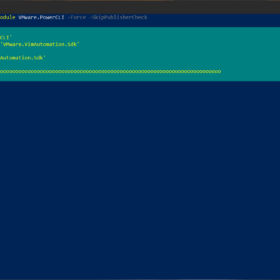

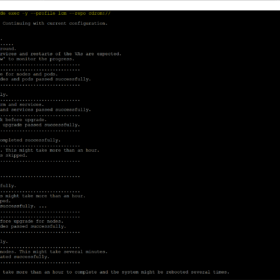

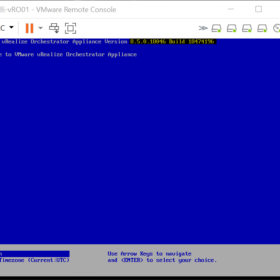

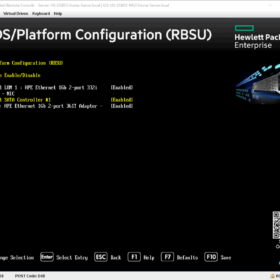

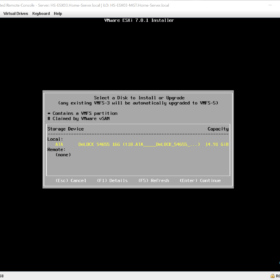

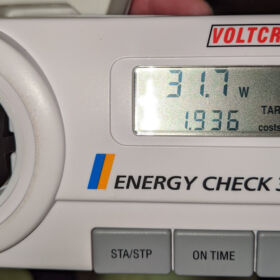

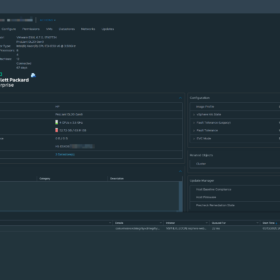
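A PowerShell alternative for checking which of the KB numbers mentioned in this post are present on a machine might look like the sketch below. It assumes the built-in `Get-HotFix` cmdlet is available and reports the same updates as `wmic qfe list`. The variable names are only illustrative, and the KB list should be adjusted to your own OS edition.

```powershell
# KB numbers taken from this post; adjust the list for your own OS edition.
$kbIds = 'KB5009595', 'KB5009624', 'KB5009555'

# Get-HotFix returns the updates Windows reports as installed
# (similar information to "wmic qfe list").
$installed = Get-HotFix | Select-Object -ExpandProperty HotFixID

foreach ($kb in $kbIds) {
    if ($installed -contains $kb) {
        Write-Output "$kb is installed"
    } else {
        Write-Output "$kb is not installed"
    }
}
```

Running this before `wusa /uninstall` shows up front whether a given KB is actually on the system, instead of finding out from the "not installed" message.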

I have created a simple procedure and added some screenshots. Here is the procedure that I performed on each domain controller in my environment:

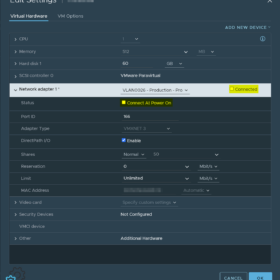

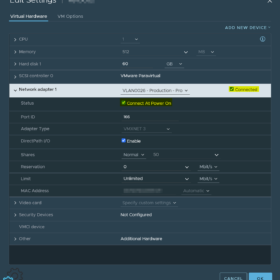

- Disconnect the virtual network card.

- Connect with the virtual machine console through the hypervisor.

- Log in with the administrator account.

- Open the PowerShell prompt.

- Remove the update, see the command below.

- Reboot the system.

- Connect the virtual network card.

- Log in with the administrator account.

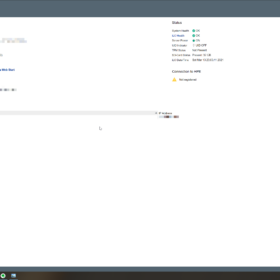

- Install update KB5010796.

- Reboot the system.

- Check the available updates to make sure it is installed.

- Everything should be working fine again.
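The final check in the procedure above could be sketched in PowerShell as follows. This is a minimal sketch, not the exact check I performed. It assumes `Get-HotFix` lists both updates on your system and uses the last boot time as a rough indicator that the boot loop has stopped. The property names in the output object are made up for illustration.

```powershell
# KB numbers from this post: the update that was removed and the fix installed afterwards.
$removedKb = 'KB5009555'
$fixKb     = 'KB5010796'

# Updates Windows currently reports as installed.
$installed = Get-HotFix | Select-Object -ExpandProperty HotFixID

# Time of the last boot, to see whether the server has stayed up since the fix.
$lastBoot = (Get-CimInstance -ClassName Win32_OperatingSystem).LastBootUpTime
$uptime   = (Get-Date) - $lastBoot

[PSCustomObject]@{
    FaultyUpdatePresent = $installed -contains $removedKb
    FixInstalled        = $installed -contains $fixKb
    LastBoot            = $lastBoot
    UptimeMinutes       = [math]::Round($uptime.TotalMinutes)
}
```

If `FixInstalled` is true, `FaultyUpdatePresent` is false, and the uptime keeps growing, the domain controller is most likely out of the loop.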

# Remove the update (the quiet option was not working and the removal required mouse interaction to work)

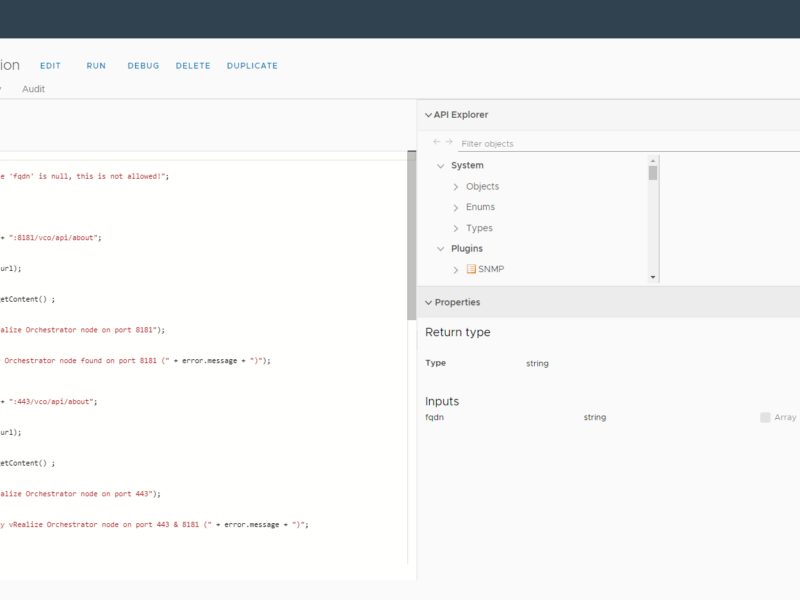

wusa /uninstall /kb:5009555

Screenshot(s)

I forgot to screenshot every step because of the short time between the boot loops, but the end result is captured in the screenshot(s). I also added screenshots from VMware vCenter showing how to disconnect and reconnect the network card.

Wrap-up

A couple of things I have learned so far: Windows Update KB numbers are not identical between the Core and Desktop Experience (full) versions. It was also strange that, after the removal, the domain controllers still listed the faulty update as the first update to install, which would have put them back in the same state.

This wraps up the blog article. Hopefully it is useful for somebody. Please respond below if you have any comments or additional information!