In this blog post we are going to automatically create Business Groups in vRealize Automation 7.X. This can be handy when a customer has a lot of Business Groups and adds more over time. So it was time to write a little bit of code that makes my life easier.

I originally wrote it for my lab environment, to set up vRealize Automation 7.X quickly for testing deployments and validating use cases.

Advantages of orchestrating this task:

- Quicker

- Consistent

- History and settings are recorded in vRealize Orchestrator (vRO)

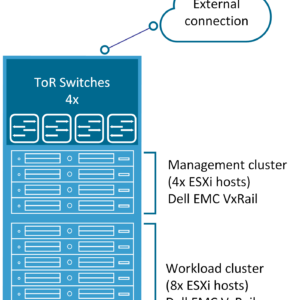

Environment

I test this vRO workflow in my home lab, which I use for testing and development. The only products you need for this workflow are:

- vRealize Automation 7.6 (vRA)

- vRealize Orchestrator 7.6 (vRO)

Note: The vRealize Automation endpoint must be registered in vRealize Orchestrator for the workflow to work.

vRealize Orchestrator Code

Here is all the information you need for creating the vRealize Orchestrator workflow:

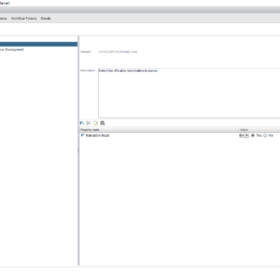

- Workflow Name: vRA 7.X – Create Business Group

- Version: 1.0

- Description: Creating a vRealize Automation 7.X Business Group in an automated way.

- Inputs:

- host (vCACCAFE:VCACHost)

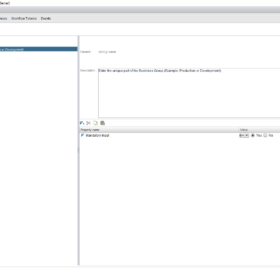

- name (string)

- adname (string)

- Outputs:

- None

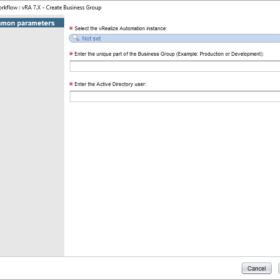

- Presentation:

  - See the screenshots below.

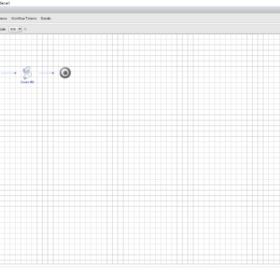

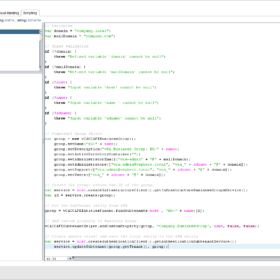

Here is the vRealize Orchestrator code in the Scriptable Task:

// Variables

var domain = "company.local";

var mailDomain = "company.com";

// Input validation

if (!domain) {

throw "Defined variable 'domain' cannot be null";

}

if (!mailDomain) {

throw "Defined variable 'mailDomain' cannot be null";

}

if (!host) {

throw "Input variable 'host' cannot be null";

}

if (!name) {

throw "Input variable 'name ' cannot be null";

}

if (!adname) {

throw "Input variable 'adname' cannot be null";

}

// Construct Group Object

var group = new vCACCAFEBusinessGroup();

group.setName("BG-" + name);

group.setDescription("vRA Business Group: BG-" + name);

group.setActiveDirectoryContainer("");

group.setAdministratorEmail("vra-admin" + "@" + mailDomain);

group.setAdministrators(["vra-admin@vsphere.local", "vra_" + adname + "@" + domain]);

group.setSupport(["vra-admin@vsphere.local", "vra_" + adname + "@" + domain]);

group.setUsers(["vra_" + adname + "@" + domain]);

// Create the group; return the ID of the group.

var service = host.createInfrastructureClient().getInfrastructureBusinessGroupsService();

var id = service.create(group);

// Get the SubTenant entity from vRA

group = vCACCAFEEntitiesFinder.findSubtenants(host , "BG-" + name)[0];

// Add custom property to Business Group

vCACCAFESubtenantHelper.addCustomProperty(group, "Company.BusinessGroup", name, false, false);

// Create update client and save the local entity to the vRA entity

var service = host.createAuthenticationClient().getAuthenticationSubtenantService();

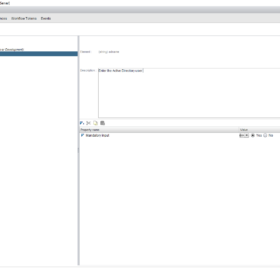

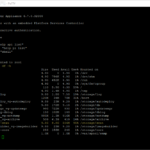

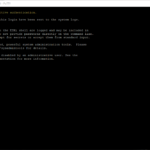

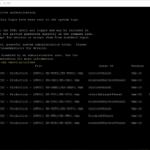

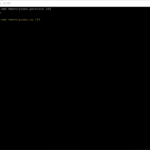

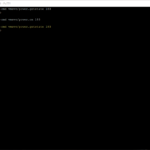

service.updateSubtenant(group.getTenant(), group);Screenshots

Here are some screenshot(s) of the Workflow configuration that helps you set up the workflow as I have done!
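The input validation in the scriptable task repeats the same check for every value. As a minimal sketch, those checks could be collapsed into one small helper. The function names `findMissingInputs` and `requireInputs` are my own hypothetical names and not part of vRO or the vRA plug-in, and the sample values are placeholders. It is written in plain ES5-style JavaScript (no `let`, `const` or arrow functions), which as far as I know is the safest choice for the vRO 7.x scripting engine.

```javascript
// Hypothetical helper (not part of the original workflow):
// returns the names of all values that are null, undefined or empty.
function findMissingInputs(inputs) {
    var missing = [];
    for (var key in inputs) {
        if (Object.prototype.hasOwnProperty.call(inputs, key) && !inputs[key]) {
            missing.push(key);
        }
    }
    return missing;
}

// Hypothetical helper: throws one error that lists every missing value,
// using the same string-throw style as the scriptable task.
function requireInputs(inputs) {
    var missing = findMissingInputs(inputs);
    if (missing.length > 0) {
        throw "Required values cannot be null or empty: " + missing.join(", ");
    }
}

// Example with placeholder values; in the workflow the real inputs are passed.
var missingExample = findMissingInputs({ host: {}, name: "Finance", adname: "" });
// missingExample is ["adname"]
```

In the scriptable task this could be called as `requireInputs({ host: host, name: name, adname: adname });`. The advantage is that a failed run reports all missing values at once instead of only the first one.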

Wrap-up

This is a vRealize Orchestrator workflow example that I use in my home lab. It creates vRealize Automation Business Groups to improve consistency and speed.

Keep in mind: every lab and customer is different. In this workflow I use, for example, the prefix BG- for Business Groups. Modify it in the way that is best suited to your environment.
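One way to make that kind of change easier is to derive all names from a single place, so the prefix is set once. The sketch below is only an illustration under my own assumptions: `buildGroupSettings` is a hypothetical helper, not a vRO or vRA API, and the sample values ("Finance", "finance") are placeholders. It only builds plain strings and arrays; the resulting values would still be passed to the `vCACCAFEBusinessGroup` setters used in the workflow.

```javascript
// Hypothetical helper: builds every name the workflow needs from one prefix,
// so changing the naming convention means changing a single argument.
function buildGroupSettings(prefix, name, adname, domain, mailDomain) {
    var groupName = prefix + name;
    var adPrincipal = "vra_" + adname + "@" + domain;
    return {
        name: groupName,
        description: "vRA Business Group: " + groupName,
        administratorEmail: "vra-admin@" + mailDomain,
        administrators: ["vra-admin@vsphere.local", adPrincipal],
        support: ["vra-admin@vsphere.local", adPrincipal],
        users: [adPrincipal]
    };
}

// Example with placeholder values.
var settings = buildGroupSettings("BG-", "Finance", "finance", "company.local", "company.com");
// settings.name is "BG-Finance"
// settings.users is ["vra_finance@company.local"]
```

In the scriptable task, `group.setName(settings.name)` and the other setters would then use these values, and the same `settings.name` could be reused for the `findSubtenants` lookup.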

Thanks for reading, and if you have comments, please respond below.