In this blog post, I show a simple vRealize Orchestrator action that retrieves information about vRealize Orchestrator nodes. It can also be run against remote nodes to compare Orchestrator versions between different nodes. It displays the product version, product build, and API version.
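As a rough illustration of the comparison idea, here is a minimal sketch in plain JavaScript. It assumes the information from two nodes has already been retrieved as JSON text. The field names are the ones the action logs; the helper name `diffAbout` and all values are made up for the example.

```javascript
// Field names as logged by the action; everything else here is hypothetical.
var FIELDS = ["version", "build-number", "api-version"];

// Hypothetical helper: list the fields that differ between two parsed responses.
function diffAbout(aboutA, aboutB) {
    return FIELDS.filter(function (field) {
        return aboutA[field] !== aboutB[field];
    });
}

// Made-up sample responses, not real product versions.
var nodeA = JSON.parse('{"version":"1.0.0","build-number":"100","api-version":"1.0"}');
var nodeB = JSON.parse('{"version":"1.0.1","build-number":"101","api-version":"1.0"}');

var differences = diffAbout(nodeA, nodeB);
console.log(differences.length === 0 ? "Nodes match" : "Differences: " + differences.join(", "));
// prints "Differences: version, build-number"
```

An empty result means both nodes report the same version, build, and API version.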

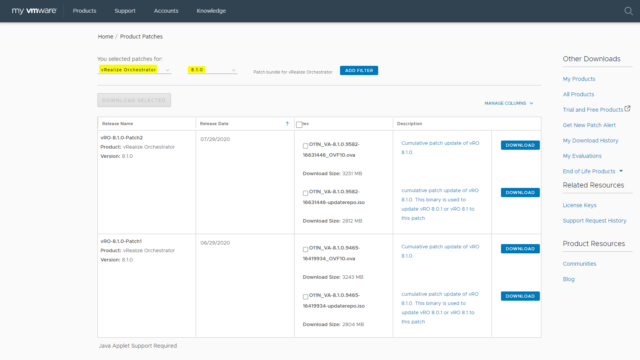

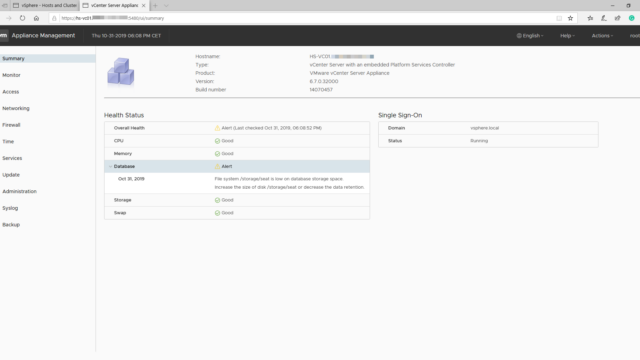

So why would you want to verify that? Lately, a hot topic around vRealize Orchestrator is migrations, because most customers are moving from version 7 to version 8 (here you see vRO 8.X in action). As a VMware consultant, you run into questions from customers about compatibility and integration use cases.

Below I share the code and a video about using the action. You mean workflow, right? No: since vRO 8.0 you can run an action directly, so you do not need a workflow around it.

Code explained

Some explanation about the action called “troubleshootVroVersion”:

- The action requires one input parameter called ‘fqdn’, for example vro.domain.local. The action detects which URL and port are required, so it automatically supports the following scenarios:

  - The target can be a standalone node, an embedded node (inside vRA), or the central load balancer in front of the nodes.

  - Both vRealize Orchestrator 7.X and vRealize Orchestrator 8.X are supported.

- No authentication is required, because the API page that the action reads (/vco/api/about) is publicly available.

- Between the Orchestrator that executes the action and the remote Orchestrator, only HTTPS on TCP port 443 is required. The action also probes port 8181, but a failure on that port is only logged.
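The port detection described above can be sketched in plain JavaScript as follows. This is only a minimal sketch: the helper names `buildAboutUrls` and `fetchFirstAvailable` are hypothetical, and the fetcher is passed in as a function so the example also runs outside vRO. Inside vRO, the fetcher could wrap `new URL(url).getContent()` as the action does.

```javascript
// Hypothetical helper: build the candidate URLs in the order the action tries them.
function buildAboutUrls(fqdn) {
    var ports = [8181, 443];
    return ports.map(function (port) {
        return "https://" + fqdn + ":" + port + "/vco/api/about";
    });
}

// Hypothetical helper: return the first response that can be fetched.
// getContent is injected; it must return a string or throw on failure.
function fetchFirstAvailable(urls, getContent) {
    var errors = [];
    for (var i = 0; i < urls.length; i++) {
        try {
            return { url: urls[i], content: getContent(urls[i]) };
        } catch (error) {
            var reason = error && error.message ? error.message : String(error);
            errors.push(urls[i] + " (" + reason + ")");
        }
    }
    throw new Error("No vRealize Orchestrator node found: " + errors.join("; "));
}

// Stand-in fetcher for a node that only answers on port 443 (made-up response).
function fakeGetContent(url) {
    if (url.indexOf(":443/") === -1) {
        throw new Error("connection refused");
    }
    return '{"version":"0.0.0"}';
}

var found = fetchFirstAvailable(buildAboutUrls("vro.domain.local"), fakeGetContent);
console.log(found.url);
// prints "https://vro.domain.local:443/vco/api/about"
```

Stopping at the first URL that answers means a node that is reachable on only one of the two ports is still detected.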

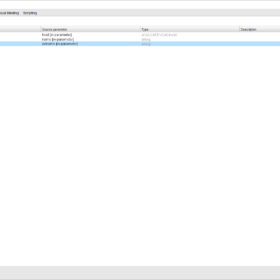

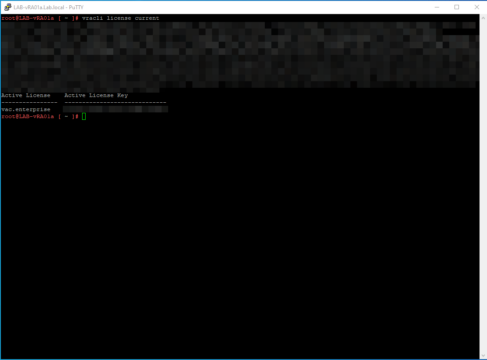

vRO Configuration

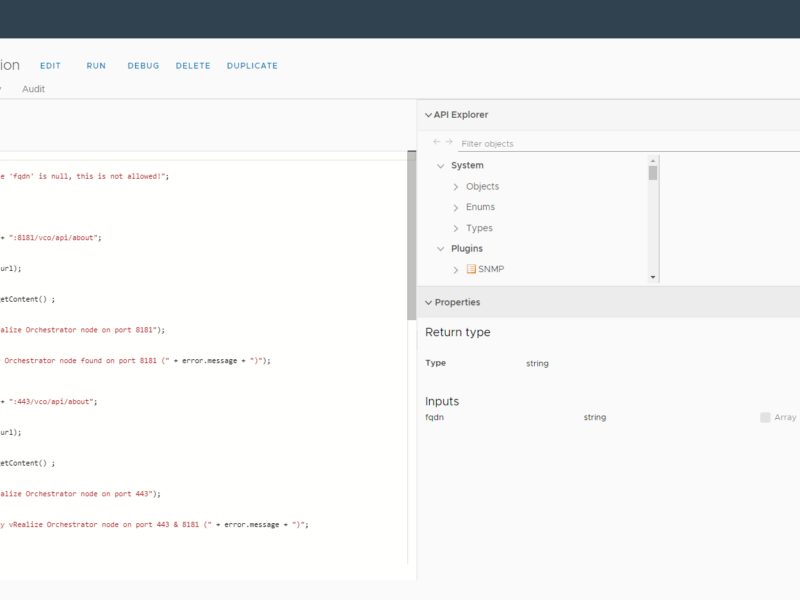

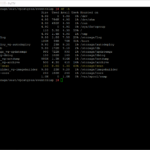

Here is an image of the configured vRO action. You can see the configured input and return type, as well as the configured language, “JavaScript”.

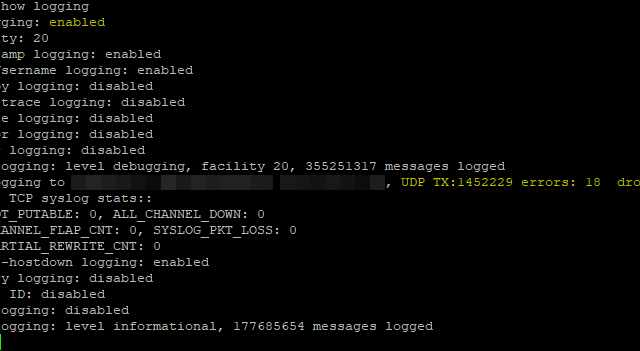

Video

I have created a recording of a vRealize Orchestrator node running the action against itself. As explained before, this can also be done against a remote vRealize Orchestrator node. The recording might also help you create the action on your own Orchestrator.

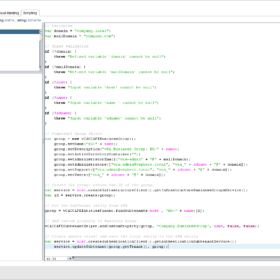

Code

Here is the code for the action and also the action configuration details for creating the action in vRealize Orchestrator:

    // Input validation
    if (!fqdn) {
        throw "The input variable 'fqdn' is null, this is not allowed!";
    }

    // Determine vRO Port
    var url;
    var urlObject;
    var result = null;

    try {
        // Port 8181
        url = "https://" + fqdn + ":8181/vco/api/about";
        // Create URL object
        urlObject = new URL(url);
        // Retrieve content
        result = urlObject.getContent();
        // Message
        System.log("Found a vRealize Orchestrator node on port 8181");
    }
    catch (error) {
        System.log("No vRealize Orchestrator node found on port 8181 (" + error.message + ")");
    }

    // Only try port 443 when port 8181 did not return any content
    if (!result) {
        try {
            // Port 443
            url = "https://" + fqdn + ":443/vco/api/about";
            // Create URL object
            urlObject = new URL(url);
            // Retrieve content
            result = urlObject.getContent();
            // Message
            System.log("Found a vRealize Orchestrator node on port 443");
        }
        catch (error) {
            throw "Could not find any vRealize Orchestrator node on port 443 & 8181 (" + error.message + ")";
        }
    }

    // JSON Parse
    var jsonObject;
    try {
        // Parse JSON data
        jsonObject = JSON.parse(result);
    }
    catch (error) {
        throw "There is an issue with the JSON object (" + error.message + ")";
    }

    // Output data to screen
    try {
        System.log("===== " + fqdn + " =====");
        System.log("Version: " + jsonObject.version);
        System.log("Build number: " + jsonObject["build-number"]);
        System.log("Build date: " + jsonObject["build-date"]);
        System.log("API Version: " + jsonObject["api-version"]);
    }
    catch (error) {
        throw "There is something wrong with the output, please verify the JSON input (" + error.message + ")";
    }

GIT

The code shown above is also available in a Git repository, where the action used in this blog post is stored as “troubleshootVroVersion.js”. The repository is available on this URL.

Wrap Up

So that is it for today. In this blog post, I showed you an action that quickly retrieves some information about the Orchestrator version. As you can see in the code, it uses a JSON object that is retrieved from a URL, and that part is easy to reuse for other purposes. So happy coding in vRO and see you next time!