After deploying a new vRealize Automation 8 installation in my lab environment, I found out that my NFR product license was due to expire within a week, so it was time to change the product key on the running environment. This write-up describes how to change the license in vRealize Automation 8 on a standard installation (standalone node) that is running with an Enterprise license.

Keep in mind: as explained in the vRealize Automation 8 release notes, you cannot change the edition of the license: “After configuring vRealize Automation with the Enterprise license, the system can not be re-configured to use the Advanced License.”

Connecting with vRA8

Open a connection to the vRealize Automation 8 appliance to get shell access to the system. I like to use PuTTY, but you can use any terminal emulator that supports SSH.

Procedure:

- Start a terminal emulator like PuTTY on your desktop.

- Connect to the FQDN/hostname of the vRealize Automation 8 appliance.

- Log in with the root account.

Viewing product license

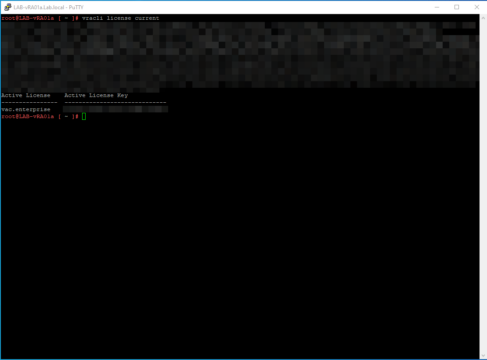

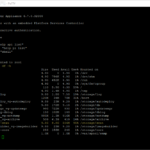

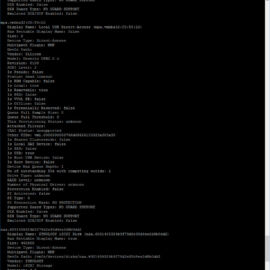

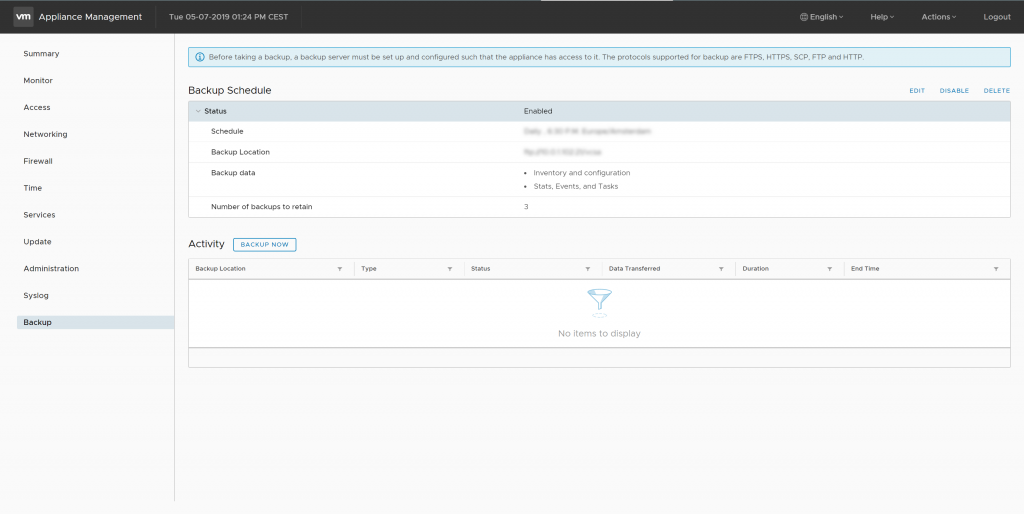

To check the license key currently installed on the vRealize Automation 8 appliance, run the command “vracli license current”. A screenshot of the output in my lab environment is included in this section (keep in mind that multiple lines are hidden).

Installing product license

To install a new license in vRA8 you need to perform some steps on the command line.

In this example we are changing the product license from one license key to another:

- New license key: AAAAA-AAAAA-AAAAA-AAAAA-AAAAA

- Old license key: ZZZZZ-ZZZZZ-ZZZZZ-ZZZZZ-ZZZZZ
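Before pasting a key into the command line, a quick format check can catch copy-paste mistakes. The sketch below assumes that real keys follow the same five-groups-of-five pattern as the placeholder keys above. `is_license_key` is a hypothetical helper name of my own and is not part of `vracli`. It only checks the shape of the key, not whether the key is valid.

```shell
#!/bin/sh
# Hypothetical helper: checks that a key looks like XXXXX-XXXXX-XXXXX-XXXXX-XXXXX.
# Assumption: five groups of five uppercase letters or digits, as in the
# placeholder keys in this post. It does not tell you whether the key is valid.
is_license_key() {
    printf '%s\n' "$1" | grep -Eq '^[A-Z0-9]{5}(-[A-Z0-9]{5}){4}$'
}

# Placeholder key from this post; replace it with your own.
NEW_KEY="AAAAA-AAAAA-AAAAA-AAAAA-AAAAA"

if is_license_key "$NEW_KEY"; then
    echo "key format looks fine"
else
    echo "key format looks wrong" >&2
fi
```

A key with a missing group or stray lowercase characters is rejected by this check, which is usually enough to spot a truncated paste.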

### List current license installed

vracli license current

### Install new license

vracli license add AAAAA-AAAAA-AAAAA-AAAAA-AAAAA

### Remove old license

vracli license remove ZZZZZ-ZZZZZ-ZZZZZ-ZZZZZ-ZZZZZ
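The add and remove steps above can also be wrapped in a small script, so that the old key is only removed after the new one has been accepted. This is a minimal sketch, not a tested procedure. It assumes that it runs as root on the appliance and that `vracli license add` exits with a non-zero status when a key is rejected, which I have not verified. `DRY_RUN`, `run` and `swap_license` are hypothetical names of my own. With `DRY_RUN=1` (the default here) the commands are only printed.

```shell
#!/bin/sh
# Placeholder keys from this post; replace them with your own.
NEW_KEY="AAAAA-AAAAA-AAAAA-AAAAA-AAAAA"
OLD_KEY="ZZZZZ-ZZZZZ-ZZZZZ-ZZZZZ-ZZZZZ"

# Hypothetical switch: defaults to 1, so commands are printed, not executed.
DRY_RUN="${DRY_RUN:-1}"

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "would run: $*"
    else
        "$@"
    fi
}

swap_license() {
    # Add the new key first and stop on failure, so the old key stays in place.
    # Assumption: vracli exits non-zero when the key is rejected.
    run vracli license add "$NEW_KEY" || return 1
    run vracli license remove "$OLD_KEY" || return 1
    # List the installed license again to confirm the result.
    run vracli license current
}

swap_license
```

To run the commands for real, start the script with `DRY_RUN=0`. The reboot is left out on purpose, so you can pick the moment to restart the appliance yourself.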

### Reboot the appliance to apply the license change

reboot

Wrap-up

That covers this short post on changing the vRealize Automation 8 product license on a running system. I wrote it up because there was no procedure available in the official documentation. I have not yet tested this procedure on a clustered deployment with three vRealize Automation 8 appliances, where it might behave differently.

Be aware: I have tested this procedure on vRealize Automation 8.0.1 Hot Fix 1. The result may differ on another hotfix or version because of ongoing product changes.

Thanks for reading this blog and see you next time!